NVIDIA Goes All Adam Smith - Small Language Models Are Best

Cognitive first principles do not change - focus on a problem to solve it


This week the ongoing battle between the massive centralized data center team and the distributed, compute-where-the-data-is team took a historic turn.

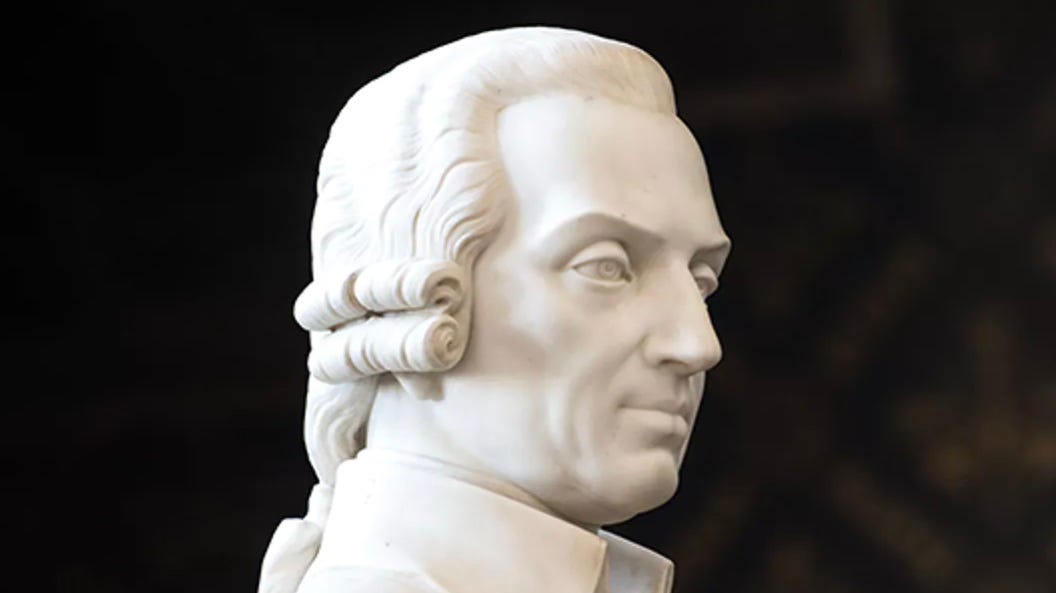

NVIDIA stepped up, going all in on classical economist Adam Smith: specialization beats dreaming, so get to work building Small Language Models.

Most A.I. agents do not need Large Language Models, says NVIDIA.

A.I. agent problems are specific, not general, and specific problems have tightly constrained domains.

That is why they are called agents - not philosophers.

Of course agents are specific, because nobody on the planet needs an energy-consuming data center ripping up Virginia farmland to write haikus or decide the purpose of mankind.

When your governor told you the future of A.I. is large data centers, he did not tell you that big data centers are needed for Large Language Models, which are needed for UNCONSTRAINED analysis, while every critical A.I. agent problem is CONSTRAINED.

In 1776, with the shots at Lexington barely a year old, Adam Smith published The Wealth of Nations.

Smith advanced a simple, yet stunning, and since-proven hypothesis:

Dividing labor into simple, repeatable tasks, the “division of labor,” is the foundational principle of classical economics.

We are learning it may also be a first principle in human and machine decisioning.

NVIDIA observed that A.I. agents are specific: they employ constrained models to address identified problems.

A.I. agents are a better fit for small language models, which amount to dividing up the A.I. labor.

Division of labor demands applying constraints to a task.

Adam Smith used the example of a pin manufacturer.

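If one guy makes the entire pin (today’s LLM), he produces only a fraction of the pins made by a team in which everyone performs a SPECIFIC TASK. In Smith’s own example, ten specialized workers made upwards of 48,000 pins a day, while a lone worker could hardly make 20.

Divide the problem into its individual components, the division of labor, and everything changes.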

· Workers become more skilled at each task.

· Workers work faster, producing more pins, because they do not waste time moving around among tasks.

· Specialization creates innovation, innovation creates new tools and methods, and more pins are manufactured.

NVIDIA made almost identical observations about Small Language Models (SLMs) versus Large Language Models (LLMs).

Since the Fractal team is steeped in economics as well as computer science, we relished the article, which you can read HERE.

Let’s review the parallels between an old white guy from 250 years ago and today’s cutting-edge technology.

The fundamental difference between LLMs and SLMs is that the SLM is task-oriented, while the LLM is a model of just about everything.

When one looks at the A.I. problems companies and the government deal with today, few, if any, need an LLM.

That’s not just us saying it, NVIDIA makes the same case.

Each task is a constrained problem, and constrained problems need a constrained model, an SLM, addressed by an agent.

Let’s take an example - one everyone can understand.

Your spouse wants BRAVO on the streaming service.

BRAVO is available via HULU and one or two less well-known streamers, and HULU is $104 a month.

Most of the HULU stuff is Disney and nobody in your house watches cartoons, so why are you paying $104 for HULU when you only want BRAVO?

That is a constrained A.I. problem.

That is a very tangible problem for a frustrated streaming viewer, and they want an answer.

Someone, probably a HULU competitor, builds an SLM to deal with this modern-day irritation, and it gives the answer one could not find after spending hours on the websites of five streaming services.

Over time, more people ask this question.

Maybe one guy wants BRAVO and ESPN but not the History Channel.

Here we are dealing with the constrained problems of life; don’t deny it, this is what each of us cares about.
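To show how small the streaming problem really is, here is a minimal sketch that answers it by plain exhaustive search, not with any language model. The catalog is hypothetical: apart from HULU’s $104 from the example above, the service names (StreamerA, StreamerB), channel lineups, and prices are made up for illustration.

```python
from itertools import combinations

# Hypothetical catalog for illustration only. Except HULU's $104 price,
# every service name, lineup, and price here is an assumption.
CATALOG = {
    "HULU": ({"BRAVO", "ESPN", "DISNEY", "HISTORY"}, 104.00),
    "StreamerA": ({"BRAVO", "HISTORY"}, 30.00),
    "StreamerB": ({"ESPN"}, 40.00),
}


def cheapest_bundle(wanted, catalog=CATALOG):
    """Return (monthly_cost, services) for the cheapest set of services
    that carries every channel in `wanted`, or None if no set does."""
    best = None
    names = sorted(catalog)
    # Try every combination of services: fine for a handful of services.
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            channels = set().union(*(catalog[name][0] for name in combo))
            if wanted <= channels:
                cost = sum(catalog[name][1] for name in combo)
                if best is None or cost < best[0]:
                    best = (cost, combo)
    return best


print(cheapest_bundle({"BRAVO"}))          # (30.0, ('StreamerA',))
print(cheapest_bundle({"BRAVO", "ESPN"}))  # (70.0, ('StreamerA', 'StreamerB'))
```

Under these made-up prices, the viewer who wants BRAVO and ESPN pays $70 for two small services instead of $104 for HULU. Brute force grows exponentially with the number of services, but a constrained domain with a handful of services keeps it trivial, which is the point.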

If you go to the A.I. system, it turns out to be an LLM, and it tells you it has no idea how to get BRAVO cheaper but that you shouldn’t be watching BRAVO anyway and should be learning a new language instead, you will scream into the phone.

As the NVIDIA piece notes, “…you do not need open-domain brilliance, you need an answer that matters.”

A.I. agents provide answers that matter - now.

A.I. agent models are there to solve very specific problems or questions.

When the system can identify from a caller’s voice that their native language is not English, and route them instantly to a native speaker, that solves a problem, and ONLY that one problem.

NVIDIA and Adam Smith agree on a whole lot more.

Specialization enables innovation.

When you are solving a small problem, like figuring out how much you need to cough up to the Federal government for that 401(k) after age 65, Schwab or Fidelity can give you a quick A.I. agent answer.

Those rules change constantly, and with small language models that aggregate, the domain experts can focus only on the 401(k) model and quickly adapt its rules, which then tie into the other small models.

Adam Smith called it dexterity. The A.I. guys from NVIDIA point out the “unused parameters” in a large model, which Schwab pays for yet which are of zero value to the customer’s problem.
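The “swap one specialist, leave the rest alone” idea can be sketched as a small registry. This is a hypothetical illustration, not NVIDIA’s or any vendor’s design: each handler is a plain function standing in for a small specialized model, and the domain labels are passed in explicitly rather than detected.

```python
from typing import Callable, Dict

# A handler stands in for one small, specialized model (hypothetical).
Handler = Callable[[str], str]


class AgentRouter:
    """Routes each question to the specialist registered for its domain."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, domain: str, handler: Handler) -> None:
        # Adding or replacing one specialist never touches the others.
        self._handlers[domain] = handler

    def route(self, domain: str, question: str) -> str:
        handler = self._handlers.get(domain)
        if handler is None:
            return f"no specialist registered for {domain!r}"
        return handler(question)


def retirement_v1(question: str) -> str:
    return "401(k) answer using the old rules"


def retirement_v2(question: str) -> str:
    return "401(k) answer using the updated rules"


def streaming(question: str) -> str:
    return "cheapest way to get BRAVO"


router = AgentRouter()
router.register("401k", retirement_v1)
router.register("streaming", streaming)
router.register("401k", retirement_v2)  # the rules changed: swap only this specialist

print(router.route("401k", "How much tax do I owe?"))       # 401(k) answer using the updated rules
print(router.route("streaming", "Where can I watch BRAVO?"))  # cheapest way to get BRAVO
```

Updating the 401(k) specialist is one `register` call; the streaming specialist keeps running unchanged.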

You would think the geeks who studied object-oriented programming in college, disaggregating programs into pieces that could be treated as entities and then assembled as building blocks into a system, would not need Adam Smith to tell them that small pieces, tied together, are better than a great big….

NVIDIA reported that serving a 70B LLM costs 10X to 30X more than serving a 7B model, measured in energy consumption, latency, and infrastructure.

The letter B stands for billions of parameters, the learned weights inside the model, so a 70B model has ten times as many as a 7B model.
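A back-of-the-envelope calculation shows where at least the bottom of that range comes from. This sketch assumes weights stored as 16-bit floats (2 bytes per parameter) and uses the common rule of thumb of about 2 floating-point operations per parameter per generated token; it ignores activations, caches, and multi-GPU overhead, so it says nothing about the higher end of NVIDIA’s range.

```python
BYTES_PER_PARAM = 2  # assumption: 16-bit (fp16/bf16) weights


def weight_memory_gb(params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed just to hold the weights, in gigabytes (10**9 bytes)."""
    return params * bytes_per_param / 1e9


def flops_per_token(params: float) -> float:
    """Rule-of-thumb compute for one generated token: ~2 FLOPs per parameter."""
    return 2 * params


small, large = 7e9, 70e9  # 7B and 70B parameters
print(f"7B weights:  {weight_memory_gb(small):.0f} GB")   # 14 GB
print(f"70B weights: {weight_memory_gb(large):.0f} GB")   # 140 GB
print(f"compute per token: {flops_per_token(large) / flops_per_token(small):.0f}x")  # 10x
```

Fourteen gigabytes fits on a single high-end GPU; 140 gigabytes generally does not, which is one reason the bigger model drags in more infrastructure.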

There is the magic word: energy.

LLMs are energy black holes, driving the madness of big data centers, each of which can consume half a million gallons of water a day. If LLMs are not needed for agentic A.I., why the data center madness?

Learn more about that at TheSustainableComputingInitiative.com

The Fractal team, as followers of this Substack know, adds that these models can be run in parallel, with many SLMs aggregated into a MESH, operating without a data center and faster than any computer available today.

NVIDIA did not include that gem in its piece, so we added it.

One of the benefits of the SLM approach for agentic A.I., according to the NVIDIA team (and we agree), is that the small language model can be fine-tuned on the data it works with.

NVIDIA explained it as small models working together, generating training data that makes the system tighter and cheaper and lets it use less energy.

Adam Smith called this innovation, and specialization pretty much always yields this benefit. Let’s look.

A small language model operates against a domain, a data model of all the stuff relevant to its world. Each operation yields answers, and those answers are unlikely to be perfect on Day 1.

So the application builders “tune” the small language model to make it responsive to its own data.

If an agent provides an inaccurate instruction, it’s possible to find out why, make the change, and use the model’s predictions, measured against actual results, to modify the model.

It is improbable, if not impossible, to do this with a large language model, which is, by definition, all things to all people.
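The “predictions measured against results” step can be sketched as error-driven data collection. This is a minimal sketch assuming each agent call is logged along with the outcome later confirmed to be correct; the `LoggedCall` fields and the prompt/completion record shape are hypothetical, and the code only selects tuning examples, it does not fine-tune anything.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class LoggedCall:
    prompt: str      # what the agent was asked
    prediction: str  # what the agent answered
    outcome: str     # the answer later confirmed as correct (assumed to be available)


def accuracy(calls: List[LoggedCall]) -> float:
    """Share of logged calls where the prediction matched the measured result."""
    if not calls:
        return 0.0
    return sum(call.prediction == call.outcome for call in calls) / len(calls)


def build_tuning_set(calls: List[LoggedCall]) -> List[Dict[str, str]]:
    """Keep only the calls the agent got wrong, paired with the correct answer,
    as candidate examples for the next round of fine-tuning."""
    return [
        {"prompt": call.prompt, "completion": call.outcome}
        for call in calls
        if call.prediction != call.outcome
    ]


log = [
    LoggedCall("Cheapest way to get BRAVO?", "StreamerA", "StreamerA"),
    LoggedCall("Cheapest way to get BRAVO and ESPN?", "HULU", "StreamerA + StreamerB"),
]
print(accuracy(log))          # 0.5
print(build_tuning_set(log))  # one corrected example
```

Because the domain is narrow, every mistake is easy to check against a known result, which is what makes this loop practical for a small model.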

Rather than take you through every parallel between Adam Smith and NVIDIA, we take the position that specialization is a first principle of cognition. That’s our opinion, and NVIDIA certainly appears to agree that it holds in A.I.

NVIDIA makes another critical observation - one easily overlooked.

Small language models make computing where the data resides more feasible. It is a short sentence that opens an entire world of technological disruption.

Computing where the data resides, like in a backpack on a battlefield, avoids sending data to Amazon and waiting for a customer service rep to respond, and it is becoming a thing.

Edge computing never took off because edge companies think the edge is a data center on every corner, but drone warfare is teaching the military types fast that the days of centralized compute are over.

NVIDIA makes the compelling case that SLMs can bring agentic A.I. to wherever the problem is, and there is unlikely to be a data center on that corner.

So why are so many people so crazy fired up about LLMs?

Aha, our favorite subject, where we claim industry expertise, and we can prove it every day.

The tech market is “addicted to centralized” compute, with all data in one great big data center and APIs connecting the components. That phrase comes from the article, not from Fractal, but we have been saying it for a long time.

How did the tech market get here?

A.I. reached the masses in the last 24 months and immediately became the must-have for everyone.

Software companies approaching half a century old, like Oracle, with old, I/O-wait-state-intensive technology, and others like Palantir, had to go A.I. or go home.

When you carry a multi-billion-dollar market cap, nobody’s going home, so obsolete technology companies went all in on A.I. through marketing, not with actual new, nimble A.I. technology.

They told the world it needed massive data centers to run large language models. Of course they did: Oracle and Palantir need a data center; A.I. does not.

The LLM vs. SLM battle started with obsolete software companies, weighed down by high-latency I/O wait states, struggling to stay relevant by claiming A.I. needed data centers the size of Manhattan.

As in all technology cycles, over time, as a new technology like A.I. addresses more problems, more insightful heads weigh in.

This week NVIDIA opened the door a little wider to the idea that agentic A.I., which is most of A.I., does not need large language models.

Fractal proves every day that any existing data center can be made 1,000 times more productive, without a commensurate increase in energy usage, using technology that reduces I/O wait states.

Follow Fractal here on Substack as we demonstrate with live customers that A.I. does not need data centers, or ancient technology software companies.

FractalComputing Substack is a newsletter about the journey of taking a massively disruptive technology to market. We envision a book about our journey, so each post is a way to capture some fun events.

Subscribe at FractalComputing.Substack.com

Fractal Website: Fractal-Computing.com

Fractal Utility Site: TheFractalUtility.com

Fractal Government Site: TheFractalGovernment.com

Fractal Sustainable Computing: TheSustainableComputinginitiative.com

@FractalCompute

Portions of our revenue are given to animal rescue charities.

Jay Valentine: bringing change to this fallen world one mind at a time. Rock on, Mister V.